Introduction

Large Language Models (LLMs) are increasingly being integrated into services such as ChatGPT to provide responses to user queries. To mitigate potential harm and prevent misuse, there have been concerted efforts to align LLMs with human values and legal compliance by incorporating techniques such as Reinforcement Learning from Human Feedback (RLHF) into their training. However, recent research has shown that even aligned LLMs are susceptible to adversarial manipulations known as Jailbreak Attacks. To address this challenge, this paper proposes a method called Token Highlighter to inspect and mitigate potential jailbreak threats in the user query. Token Highlighter introduces a concept called Affirmation Loss to measure the LLM's willingness to answer the user query. It then uses the gradient of the Affirmation Loss with respect to each token in the user query to locate the jailbreak-critical tokens. Further, Token Highlighter applies our proposed Soft Removal technique to mitigate the jailbreak effects of the critical tokens by shrinking their token embeddings. Experimental results on two aligned LLMs (LLaMA-2 and Vicuna-V1.5) demonstrate that the proposed method can effectively defend against a variety of Jailbreak Attacks while maintaining competent performance on benign questions from the AlpacaEval benchmark. In addition, Token Highlighter is a cost-effective and interpretable defense because it only needs to query the protected LLM once to compute the Affirmation Loss and can highlight the critical tokens upon refusal.

What is Jailbreak?

Aligned Large Language Models (LLMs) have been shown to be vulnerable to jailbreak attacks, which exploit token-level or prompt-level manipulations to circumvent the safety guardrails embedded within these models. A notable example is a jailbroken LLM that is tricked into giving tutorials on how to cause harm to others. Jailbreak techniques often employ sophisticated strategies, including but not limited to role-playing, instruction disguising, leading language, and the normalization of illicit action, as illustrated in the examples below.

Token Highlighter: Principle and Interpretability

At a high level, successful jailbreaks share a common principle: they try to make the LLM willing to affirm a user request that it would otherwise reject. Drawing on this observation, our defense aims to find the tokens that are most critical in forcing the LLM to generate such affirmative responses, decrease their importance in the generation, and thereby resolve the jailbreak risks these tokens bring. To identify these tokens, we propose a new concept called the Affirmation Loss. We then use the norm of the loss's gradient with respect to each token in the user input prompt to find the jailbreak-critical tokens. We select the tokens with the largest gradient norms and apply Soft Removal to them to mitigate the potential jailbreak risks. Below we introduce how we define these concepts mathematically.
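The selection and Soft Removal steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the gradient of the Affirmation Loss with respect to each token embedding has already been computed, and the function names `highlight_tokens` and `soft_remove`, the toy vectors, and the rounding rule for the number of highlighted tokens are all hypothetical. Here `alpha` plays the role of the highlight percentage and `beta` the soft removal level.

```python
import math


def highlight_tokens(grads, alpha):
    """Return the indices of the tokens with the largest gradient norms.

    grads: one gradient vector per token (list of lists of floats),
           assumed to come from the Affirmation Loss.
    alpha: highlight percentage in [0, 1].
    """
    norms = [math.sqrt(sum(g * g for g in vec)) for vec in grads]
    # Assumption: round the number of highlighted tokens up.
    k = math.ceil(alpha * len(norms))
    ranked = sorted(range(len(norms)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:k])


def soft_remove(embeddings, critical, beta):
    """Shrink the embeddings of the critical tokens by a factor beta."""
    critical = set(critical)
    return [
        [beta * v for v in emb] if i in critical else list(emb)
        for i, emb in enumerate(embeddings)
    ]


# Toy inputs (made up for illustration): 4 tokens, 2-dimensional vectors.
toy_grads = [[0.1, 0.0], [3.0, 4.0], [0.0, 0.2], [1.0, 0.0]]
toy_embeddings = [[1.0, 1.0] for _ in toy_grads]

critical = highlight_tokens(toy_grads, alpha=0.25)
shrunk = soft_remove(toy_embeddings, critical, beta=0.5)
print(critical)  # [1]
print(shrunk)    # [[1.0, 1.0], [0.5, 0.5], [1.0, 1.0], [1.0, 1.0]]
```

In this sketch, setting `beta` to 1 leaves every embedding unchanged, while smaller values shrink the highlighted tokens more aggressively; the non-highlighted tokens are never modified.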

We interpret Token Highlighter by showcasing the highlighted tokens for several typical jailbreaks. We provide examples below, including a token-level jailbreak (GCG) and a prompt-level jailbreak (TAP). For GCG, which appends an optimized adversarial suffix to the original malicious query, the highlighted tokens all fall within the inserted suffix. For TAP, which uses an attacker LLM to refine the malicious instruction, the highlighted tokens reveal the strategies the attacker used to rewrite the prompt: exploiting the LLM's mismatched generalization through role-playing (e.g., "You are a private investigator..."), story-telling (e.g., content dilution), introducing illusive objectives (e.g., writing a dialogue or a report), and instructing the LLM to begin its response with an affirmation (e.g., "begin with 'Sure, here is guidance on ...'").


Performance Evaluation

The performance of jailbreak defense methods is usually measured by how much they reduce the attack success rate (ASR). Major concerns when developing such methods are the performance degradation of the LLM on nominal benign prompts and the increased inference time cost. We test our method on Vicuna-7B-V1.5 alongside existing defense methods, jointly considering the ASR, Win Rate, and running time cost. In the plot shown below, the horizontal axis represents the ASR averaged over 6 jailbreak attacks (GCG, AutoDAN, PAIR, TAP, Manyshot, and AIM), and the vertical axis shows the Win Rate on AlpacaEval of the protected LLM when the corresponding defense is deployed. The value printed next to each marker is the running time averaged across the 25 samples selected from the AlpacaEval dataset. A larger marker means a lower running time cost. Our method stands out by simultaneously achieving low ASR, high Win Rate, and small running time cost.
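As a rough illustration of how an averaged ASR can be computed, the sketch below treats each attack's ASR as the fraction of prompts judged as successful jailbreaks and then takes the unweighted mean across attacks. The function names and the boolean outcomes are hypothetical placeholders, not results from our experiments, and how a single attempt is judged as successful is outside the scope of this sketch.

```python
def attack_success_rate(outcomes):
    """Fraction of attempts judged as successful jailbreaks."""
    return sum(outcomes) / len(outcomes)


def average_asr(outcomes_by_attack):
    """Unweighted mean of the per-attack success rates."""
    rates = [attack_success_rate(o) for o in outcomes_by_attack.values()]
    return sum(rates) / len(rates)


# Made-up outcomes for two attacks (True = the jailbreak succeeded).
toy_outcomes = {
    "GCG": [True, False, False, False],  # ASR 0.25
    "TAP": [True, True, False, False],   # ASR 0.5
}
print(average_asr(toy_outcomes))  # 0.375
```

Averaging per-attack rates, rather than pooling all attempts, keeps an attack with more test prompts from dominating the reported number.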

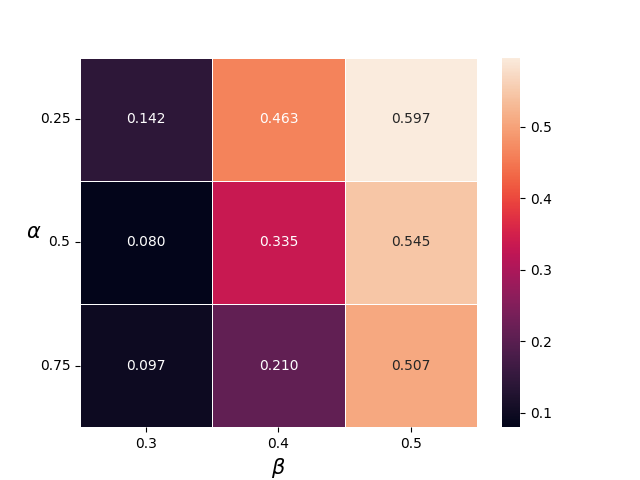

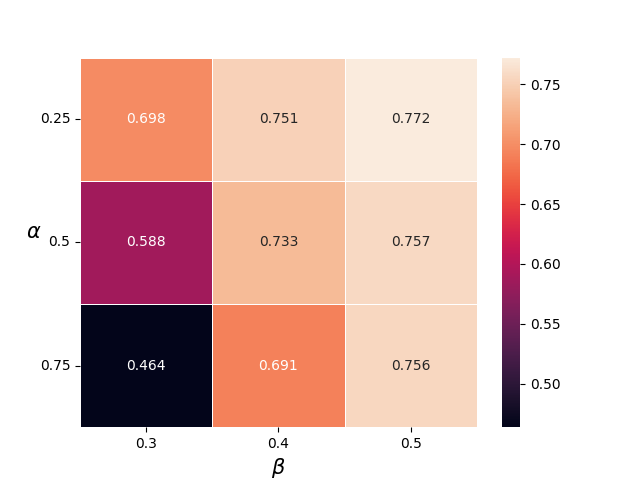

Recall that the Token Highlighter algorithm has two parameters: the highlight percentage α and the soft removal level β. In Figure 3, we report the average ASR and the Win Rate for various values of α and β. The figure shows that the ASR follows the same trend as the Win Rate as α and β change. Specifically, when α is fixed, a larger β increases both the Win Rate and the ASR. When β is fixed, a larger α reduces both the ASR and the Win Rate.

Inquiries

If you have any questions regarding Token Highlighter, please contact Xiaomeng Hu and Pin-Yu Chen.

Citations

If you find Token Highlighter helpful and useful for your research, please cite our main paper as follows:

@article{DBLP:journals/corr/abs-2412-18171,
  author     = {Xiaomeng Hu and Pin{-}Yu Chen and Tsung{-}Yi Ho},
  title      = {Token Highlighter: Inspecting and Mitigating Jailbreak Prompts for Large Language Models},
  journal    = {CoRR},
  volume     = {abs/2412.18171},
  year       = {2024},
  url        = {https://doi.org/10.48550/arXiv.2412.18171},
  doi        = {10.48550/ARXIV.2412.18171},
  eprinttype = {arXiv},
  eprint     = {2412.18171},
  timestamp  = {Sat, 25 Jan 2025 12:51:16 +0100},
  biburl     = {https://dblp.org/rec/journals/corr/abs-2412-18171.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}